Is Prompt Engineering Dead? How GPT-4 Is Changing the Game

Introduction

Prompt engineering, the practice of crafting inputs that guide AI models such as OpenAI’s GPT series toward a desired output, has drawn significant attention in recent years. With the arrival of GPT-4, however, many are questioning whether deep prompt engineering is still necessary. Has GPT-4 rendered prompt engineering obsolete?

What Is Prompt Engineering?

Prompt engineering involves designing and refining prompts to elicit specific responses from AI language models. In earlier iterations like GPT-3, users often had to:

- Specify Detailed Instructions: Including phrases like “Never do this” or “Always remember to.”

- Use XML Tags: Wrapping content in tags to guide the model’s focus.

- Iteratively Test and Adjust: Running prompts multiple times and tweaking them for consistent results.

This meticulous process was essential to get precise and accurate outputs, especially in applications like content generation, code writing, and data analysis.
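The "test and adjust" loop described above can be made concrete with a small consistency check. What follows is a minimal sketch, not a definitive method: `consistency_rate` and `make_fake_model` are hypothetical names introduced for illustration, and the fake model simply cycles through canned replies so the example runs without any API access. In practice you would pass in your own function that calls a real model.

```python
from collections import Counter
from typing import Callable, List


def consistency_rate(model: Callable[[str], str], prompt: str, runs: int = 5) -> float:
    """Run the same prompt several times and return the share of runs
    that produced the single most common output."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    outputs = [model(prompt).strip() for _ in range(runs)]
    most_common_count = Counter(outputs).most_common(1)[0][1]
    return most_common_count / runs


def make_fake_model(replies: List[str]) -> Callable[[str], str]:
    """Hypothetical stand-in for a real model call: cycles through canned replies."""
    state = {"calls": 0}

    def fake(prompt: str) -> str:
        reply = replies[state["calls"] % len(replies)]
        state["calls"] += 1
        return reply

    return fake


prompt = "Classify the sentiment: great product!"

# A prompt that always yields the same answer versus one that drifts.
stable_rate = consistency_rate(make_fake_model(["positive"]), prompt, runs=4)
flaky_rate = consistency_rate(
    make_fake_model(["positive", "negative", "positive", "neutral"]), prompt, runs=4
)

print(stable_rate, flaky_rate)  # prints: 1.0 0.5
```

A low rate is a signal that the prompt needs another round of tweaking. Exact string matching is a deliberately crude measure and only suits short, constrained outputs such as labels.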

The Evolution of Language Models

Language models have come a long way since GPT-1. Each new version brought enhancements:

- GPT-2: Improved coherence and context understanding.

- GPT-3: Demonstrated few-shot learning from examples placed directly in the prompt, reducing the need for task-specific training data.

- GPT-3.5: Further refined context handling but still required prompt adjustments.

Despite these advancements, the models often struggled to follow complex instructions without detailed prompts.

How GPT-4 Changes Everything

Enter GPT-4, OpenAI’s most advanced language model at the time of writing. Its stronger reasoning and deeper understanding of context make it remarkably proficient at following straightforward instructions without elaborate prompt engineering.

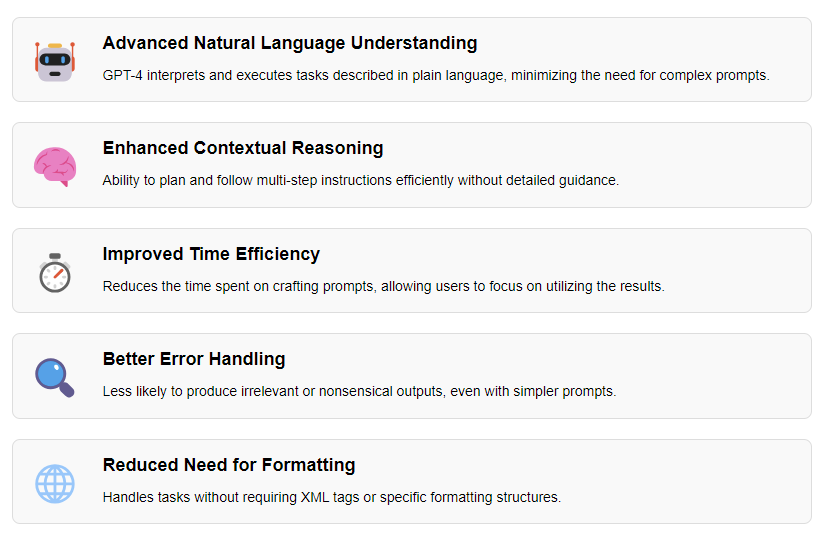

Key Improvements with GPT-4:

- Natural Language Understanding: GPT-4 can interpret and execute tasks described in plain language.

- Step-by-Step Reasoning: The model can plan and follow multi-step instructions efficiently.

- Reduced Need for XML Tags: While still useful in some cases, GPT-4 often doesn’t require wrapping inputs in XML tags to understand the task.
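For the cases where XML tags are still useful, such as separating an instruction from a long pasted document, the wrapping itself is easy to automate. This is a rough sketch under stated assumptions: `wrap_in_tag` and `build_prompt` are hypothetical helpers, the `document` tag name is arbitrary, and escaping the content with the standard library's `xml.sax.saxutils.escape` is one possible design choice for keeping the delimiters unambiguous, not a requirement of any model.

```python
from xml.sax.saxutils import escape


def wrap_in_tag(tag: str, content: str) -> str:
    """Wrap content in a simple XML-style tag, escaping &, < and >
    so the content cannot be mistaken for a tag."""
    if not tag.isidentifier():
        raise ValueError(f"unsupported tag name: {tag!r}")
    return f"<{tag}>\n{escape(content)}\n</{tag}>"


def build_prompt(instruction: str, document: str) -> str:
    """Put the plain-language instruction first, then the tagged document."""
    return f"{instruction}\n\n{wrap_in_tag('document', document)}"


prompt = build_prompt(
    "Summarize the document below in two sentences.",
    "Q3 revenue rose 4% & costs fell <2%.",
)
print(prompt)
```

Whether the tags help at all depends on the model and the task, so it is worth comparing results with and without them.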

Simplifying Prompts: A New Approach

Previously, crafting prompts was a labor-intensive process. Users had to:

- Create Extensive Prompts: Sometimes exceeding thousands of words.

- Include Detailed Do’s and Don’ts: Specifying every minor instruction.

- Utilize Complex Formatting: Incorporating XML tags and specific structures.

With GPT-4, the approach to prompting has become significantly more straightforward:

- Concise Instructions: A simple, clear directive is often enough.

- Natural Language: Using everyday language without technical jargon.

- Minimal Formatting: Reducing or eliminating the need for XML tags and special structures.

Example: From Complex to Simple

Old Prompting Method:

You are to act as an expert content writer. Never include irrelevant information. Always format the output in JSON. Remember to avoid passive voice. The content should be engaging and informative. Use XML tags to wrap key points.

New Prompting Method with GPT-4:

Please read the following data and provide an engaging, informative summary in JSON format.

Here is the data...
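Even with a simple prompt, a request for JSON is only useful if the reply actually parses. Here is a minimal sketch of that check, assuming the reply arrives as a plain string. `build_summary_prompt` and `parse_json_reply` are hypothetical helper names, and `sample_reply` is a made-up response used only so the example runs without calling any model.

```python
import json


def build_summary_prompt(data: str) -> str:
    """Assemble the concise prompt from the example above."""
    return (
        "Please read the following data and provide an engaging, "
        "informative summary in JSON format.\n\n" + data
    )


def parse_json_reply(reply: str) -> dict:
    """Parse a reply as JSON and require a top-level object."""
    try:
        parsed = json.loads(reply)
    except json.JSONDecodeError as exc:
        raise ValueError(f"reply is not valid JSON: {exc}") from exc
    if not isinstance(parsed, dict):
        raise ValueError("expected a JSON object at the top level")
    return parsed


# Made-up reply for illustration; a real one would come from the model.
sample_reply = '{"summary": "Sales grew in every region."}'
result = parse_json_reply(sample_reply)
print(result["summary"])  # prints: Sales grew in every region.
```

If parsing fails, a common response is to retry the request or tighten the instruction, which is a small reminder that some prompt iteration survives even with stronger models.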

The Impact on Various Industries

The shift in prompting methodology isn’t just a theoretical improvement; it has practical implications across multiple sectors:

- Content Creation: Writers can generate high-quality drafts with minimal prompt engineering.

- Software Development: Developers can obtain code snippets or explanations without detailed prompts.

- Data Analysis: Analysts can request complex data interpretations using simple language.

Why Prompt Engineering Is Becoming Less Critical

Several factors contribute to the decreasing importance of prompt engineering:

- Advanced Context Understanding: GPT-4 can infer user intent more accurately.

- More Reliable Outputs: The model is less likely to produce irrelevant or nonsensical responses.

- Time Efficiency: Users spend less time crafting prompts and more time utilizing the results.

The Future of Prompt Engineering

So, is prompt engineering truly dead? Not entirely.

Ongoing Role:

- Specialized Tasks: Complex or highly specialized tasks may still benefit from detailed prompts.

- Customized Solutions: Custom AI products, including those built on fine-tuned models, may still need some level of prompt engineering.

- Maximizing Output Quality: In competitive industries, even slight gains from a carefully tuned prompt can make a significant difference.

However, for the average user and many applications, GPT-4’s advanced capabilities significantly reduce the need for intricate prompt crafting.

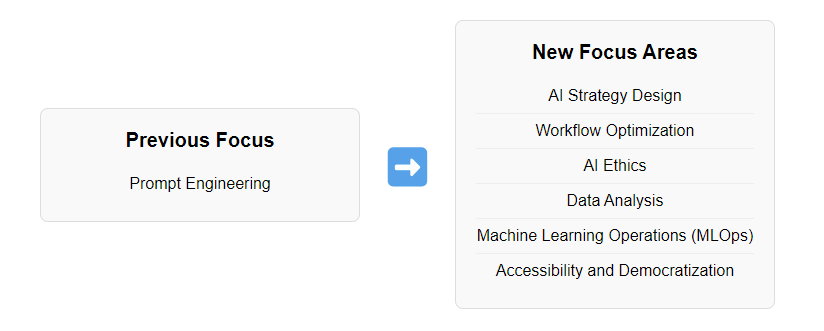

Adapting to the Changing Landscape

For professionals in the field:

- Focus on Strategy: Shift from prompt crafting to designing overall AI strategies and workflows.

- Learn New Skills: Explore areas like AI ethics, data analysis, and machine learning operations (MLOps).

- Embrace Accessibility: Use GPT-4’s user-friendly nature to democratize AI and involve more stakeholders.

Conclusion

GPT-4 represents a significant leap forward in AI language models, diminishing the necessity for complex prompt engineering. While the art of crafting prompts isn’t entirely obsolete, its role is undoubtedly changing. Professionals and enthusiasts alike should adapt to this new landscape, focusing on broader AI integration and strategic implementation.

Final Thoughts

The evolution of AI models like GPT-4 is making advanced technology more accessible than ever. As we embrace these changes, it’s essential to stay informed and agile, ready to pivot as the industry continues to innovate.